Right now, humanists often have to take topic modeling on faith. There are several good posts out there that introduce the principle of the thing (by Matt Jockers, for instance, and Scott Weingart). But it’s a long step up from those posts to the computer-science articles that explain “Latent Dirichlet Allocation” mathematically. My goal in this post is to provide a bridge between those two levels of difficulty.

Computer scientists make LDA seem complicated because they care about proving that their algorithms work. And the proof is indeed brain-squashingly hard. But the practice of topic modeling makes good sense on its own, without proof, and does not require you to spend even a second thinking about “Dirichlet distributions.” When the math is approached in a practical way, I think humanists will find it easy, intuitive, and empowering. This post focuses on LDA as shorthand for a broader family of “probabilistic” techniques. I’m going to ask how they work, what they’re for, and what their limits are.

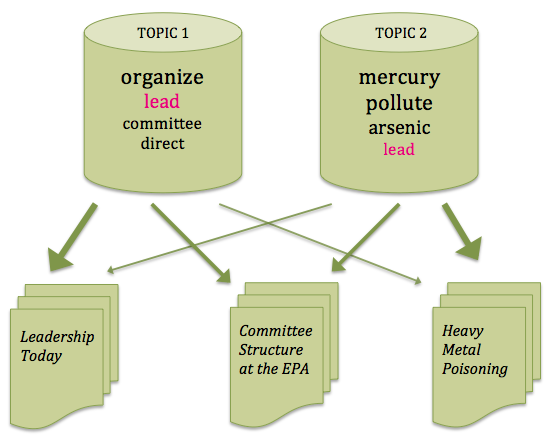

How does it work? Say we’ve got a collection of documents, and we want to identify underlying “topics” that organize the collection. Assume that each document contains a mixture of different topics. Let’s also assume that a “topic” can be understood as a collection of words that have different probabilities of appearance in passages discussing the topic. One topic might contain many occurrences of “organize,” “committee,” “direct,” and “lead.” Another might contain a lot of “mercury” and “arsenic,” with a few occurrences of “lead.” (Most of the occurrences of “lead” in this second topic, incidentally, are nouns instead of verbs; part of the value of LDA will be that it implicitly sorts out the different contexts/meanings of a written symbol.)

Of course, we can’t directly observe topics; in reality all we have are documents. Topic modeling is a way of extrapolating backward from a collection of documents to infer the discourses (“topics”) that could have generated them. (The notion that documents are produced by discourses rather than authors is alien to common sense, but not alien to literary theory.) Unfortunately, there is no way to infer the topics exactly: there are too many unknowns. But pretend for a moment that we had the problem mostly solved. Suppose we knew which topic produced every word in the collection, except for this one word in document D. The word happens to be “lead,” which we’ll call word type W. How are we going to decide whether this occurrence of W belongs to topic Z?

We can’t know for sure. But one way to guess is to consider two questions. A) How often does “lead” appear in topic Z elsewhere? If “lead” often occurs in discussions of Z, then this instance of “lead” might belong to Z as well. But a word can be common in more than one topic. And we don’t want to assign “lead” to a topic about leadership if this document is mostly about heavy metal contamination. So we also need to consider B) How common is topic Z in the rest of this document?

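Here’s what we’ll do. For each possible topic Z, we’ll multiply the frequency of this word type W in Z by the number of other words in document D that already belong to Z. The result will be proportional to the probability that this word came from Z. Here’s the actual formula:

$$P(Z \mid W, D) \propto \frac{\#\text{ of word } W \text{ in topic } Z + \beta_w}{\text{total tokens in } Z + \beta} \times \left(\#\text{ of words in } D \text{ that belong to } Z + \alpha\right)$$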

Simple enough. Okay, yes, there are a few Greek letters scattered in there, but they aren’t terribly important. They’re called “hyperparameters” — stop right there! I see you reaching to close that browser tab! — but you can also think of them simply as fudge factors. There’s some chance that this word belongs to topic Z even if it is nowhere else associated with Z; the fudge factors keep that possibility open. The overall emphasis on probability in this technique, of course, is why it’s called probabilistic topic modeling.
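To see how the two questions trade off against each other, here is a minimal Python sketch of the calculation described above. It is only an illustration: the function name `topic_weights`, the counts, and the values of the fudge factors `alpha` and `beta` are all invented, and I assume the same small `beta` is added for every word in a vocabulary of `vocab_size` types.

```python
def topic_weights(word_in_topic, tokens_in_topic, doc_topic_counts,
                  vocab_size, alpha=0.1, beta=0.01):
    """Return normalized probabilities that one word token belongs to each topic."""
    weights = []
    for z in range(len(tokens_in_topic)):
        # A) How often does this word type appear in topic z elsewhere?
        word_part = (word_in_topic[z] + beta) / (tokens_in_topic[z] + vocab_size * beta)
        # B) How common is topic z in the rest of this document?
        doc_part = doc_topic_counts[z] + alpha
        weights.append(word_part * doc_part)
    # Dividing by the total turns the raw weights into probabilities.
    total = sum(weights)
    return [w / total for w in weights]


# Invented counts: topic 0 is "leadership", topic 1 is "heavy metals".
probs = topic_weights(
    word_in_topic=[50, 10],        # occurrences of "lead" in each topic
    tokens_in_topic=[1000, 1000],  # total words assigned to each topic
    doc_topic_counts=[2, 40],      # other words in this document, by topic
    vocab_size=1000,
)
print(probs)
```

With these made-up numbers, “lead” is five times more common in the leadership topic, but the document is so dominated by heavy metals that topic 1 still gets the larger share of the probability.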

Now, suppose that instead of having the problem mostly solved, we had only a wild guess which word belonged to which topic. We could still use the strategy outlined above to improve our guess, by making it more internally consistent. We could go through the collection, word by word, and reassign each word to a topic, guided by the formula above. As we do that, a) words will gradually become more common in topics where they are already common. And also, b) topics will become more common in documents where they are already common. Thus our model will gradually become more consistent as topics focus on specific words and documents. But it can’t ever become perfectly consistent, because words and documents don’t line up in one-to-one fashion. So the tendency for topics to concentrate on particular words and documents will eventually be limited by the actual, messy distribution of words across documents.

That’s how topic modeling works in practice. You assign words to topics randomly and then just keep improving the model, to make your guess more internally consistent, until the model reaches an equilibrium that is as consistent as the collection allows.
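The loop described above can be sketched in a few dozen lines of Python. This is a toy version written under stated assumptions, not MALLET’s implementation or my own production code: words are represented as integer ids, the function name `gibbs_lda` and its parameters are hypothetical, and the fudge factors `alpha` and `beta` are fixed at arbitrary small values.

```python
import random


def gibbs_lda(docs, num_topics, vocab_size, iterations=200,
              alpha=0.1, beta=0.01, seed=0):
    """Toy topic model: docs is a list of lists of integer word ids."""
    rng = random.Random(seed)
    topic_word = [[0] * vocab_size for _ in range(num_topics)]  # word counts per topic
    topic_total = [0] * num_topics                              # total words per topic
    doc_topic = [[0] * num_topics for _ in docs]                # topic counts per document
    assignments = []

    # Start with a wild guess: assign every word to a random topic.
    for d, doc in enumerate(docs):
        zs = []
        for w in doc:
            z = rng.randrange(num_topics)
            zs.append(z)
            topic_word[z][w] += 1
            topic_total[z] += 1
            doc_topic[d][z] += 1
        assignments.append(zs)

    # Go through the collection word by word, reassigning each word.
    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                # Pretend we know every assignment except this one.
                z = assignments[d][i]
                topic_word[z][w] -= 1
                topic_total[z] -= 1
                doc_topic[d][z] -= 1
                # Weight each topic by (word's frequency in topic) x (topic's share of doc).
                weights = [
                    (topic_word[k][w] + beta) / (topic_total[k] + vocab_size * beta)
                    * (doc_topic[d][k] + alpha)
                    for k in range(num_topics)
                ]
                # Choose a new topic at random, in proportion to the weights.
                z = rng.choices(range(num_topics), weights=weights)[0]
                assignments[d][i] = z
                topic_word[z][w] += 1
                topic_total[z] += 1
                doc_topic[d][z] += 1

    return assignments, topic_word, doc_topic
```

Calling `gibbs_lda(docs, num_topics=2, vocab_size=6)` on a handful of short documents returns the final word-to-topic assignments along with the two count tables. Note that each word is reassigned by a weighted random draw rather than by always picking the likeliest topic; that randomness is what keeps the model from locking in its first wild guess.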

What is it for? Topic modeling gives us a way to infer the latent structure behind a collection of documents. In principle, it could work at any scale, but I tend to think human beings are already pretty good at inferring the latent structure in (say) a single writer’s oeuvre. I suspect this technique becomes more useful as we move toward a scale that is too large to fit into human memory.

So far, most of the humanists who have explored topic modeling have been historians, and I suspect that historians and literary scholars will use this technique differently. Generally, historians have tried to assign a single label to each topic. So in mining the Richmond Daily Dispatch, Robert K. Nelson looks at a topic with words like “hundred,” “cotton,” “year,” “dollars,” and “money,” and identifies it as TRADE — plausibly enough. Then he can graph the frequency of the topic as it varies over the print run of the newspaper.

As a literary scholar, I find that I learn more from ambiguous topics than I do from straightforwardly semantic ones. When I run into a topic like “sea,” “ship,” “boat,” “shore,” “vessel,” “water,” I shrug. Yes, some books discuss sea travel more than others do. But I’m more interested in topics like this:

You can tell by looking at the list of words that this is poetry, and plotting the volumes where the topic is prominent confirms the guess.

This topic is prominent in volumes of poetry from 1815 to 1835, especially in poetry by women, including Felicia Hemans, Letitia Landon, and Caroline Norton. Lord Byron is also well represented. It’s not really a “topic,” of course, because these words aren’t linked by a single referent. Rather it’s a discourse or a kind of poetic rhetoric. In part it seems predictably Romantic (“deep bright wild eye”), but less colorful function words like “where” and “when” may reveal just as much about the rhetoric that binds this topic together.

A topic like this one is hard to interpret. But for a literary scholar, that’s a plus. I want this technique to point me toward something I don’t yet understand, and I almost never find that the results are too ambiguous to be useful. The problematic topics are the intuitive ones — the ones that are clearly about war, or seafaring, or trade. I can’t do much with those.

Now, I have to admit that there’s a bit of fine-tuning required up front, before I start getting “meaningfully ambiguous” results. In particular, a standard list of stopwords is rarely adequate. For instance, in topic-modeling fiction I find it useful to get rid of at least the most common personal pronouns, because otherwise the difference between 1st and 3rd person point-of-view becomes a dominant signal that crowds out other interesting phenomena. Personal names also need to be weeded out; otherwise you discover strong, boring connections between every book with a character named “Richard.” This sort of thing is very much a critical judgment call; it’s not a science.
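As a rough illustration of that kind of weeding, here is a small Python sketch. Everything in it is an assumption made for the example: the pronoun list is deliberately partial, the function name `filter_tokens` is hypothetical, and in practice the list of personal names would have to be built for the particular collection.

```python
import re

# A partial, hand-picked list; which pronouns to drop is a judgment call.
PRONOUNS = {
    "i", "me", "my", "we", "us", "our", "you", "your",
    "he", "him", "his", "she", "her", "they", "them", "their",
}


def filter_tokens(text, stopwords, names):
    """Lowercase and tokenize text, then drop stopwords, pronouns, and personal names."""
    tokens = re.findall(r"[a-z']+", text.lower())
    drop = set(stopwords) | PRONOUNS | {name.lower() for name in names}
    return [token for token in tokens if token not in drop]


kept = filter_tokens(
    "Richard said he would lead the committee.",
    stopwords={"the", "would"},
    names={"Richard"},
)
print(kept)
```

Here the name, the pronoun, and the two stopwords are removed, leaving only “said,” “lead,” and “committee” for the model to work with.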

I should also admit that, when you’re modeling fiction, the “author” signal can be very strong. I frequently discover topics that are dominated by a single author, and clearly reflect her unique idiom. This could be a feature or a bug, depending on your interests; I tend to view it as a bug, but I find that the author signal does diffuse more or less automatically as the collection expands.

What are the limits of probabilistic topic modeling? I spent a long time resisting the allure of LDA, because it seemed like a fragile and unnecessarily complicated technique. But I have convinced myself that it’s both effective and less complex than I thought. (Matt Jockers, Travis Brown, Neil Fraistat, and Scott Weingart also deserve credit for convincing me to try it.)

This isn’t to say that we need to use probabilistic techniques for everything we do. LDA and its relatives are valuable exploratory methods, but I’m not sure how much value they will have as evidence. For one thing, they require you to make a series of judgment calls that deeply shape the results you get (from choosing stopwords, to the number of topics produced, to the scope of the collection). The resulting model ends up being tailored in difficult-to-explain ways by a researcher’s preferences. Simpler techniques, like corpus comparison, can answer a question more transparently and persuasively, if the question is already well-formed. (In this sense, I think Ben Schmidt is right to feel that topic modeling wouldn’t be particularly useful for the kinds of comparative questions he likes to pose.)

Moreover, probabilistic techniques have an unholy thirst for memory and processing time. You have to create several different variables for every single word in the corpus. The models I’ve been running, with roughly 2,000 volumes, are getting near the edge of what can be done on an average desktop machine, and commonly take a day. To go any further with this, I’m going to have to beg for computing time. That’s not a problem for me here at Urbana-Champaign (you may recall that we invented HAL), but it will become a problem for humanists at other kinds of institutions.

Probabilistic methods are also less robust than, say, vector-space methods. When I started running LDA, I immediately discovered noise in my collection that had not previously been a problem. Running headers at the tops of pages, in particular, left traces: until I took out those headers, topics were suspiciously sensitive to the titles of volumes. But LDA is sensitive to noise, after all, because it is sensitive to everything else! On the whole, if you’re just fishing for interesting patterns in a large collection of documents, I think probabilistic techniques are the way to go.

Where to go next

The standard implementation of LDA is the one in MALLET. I haven’t used it yet, because I wanted to build my own version, to make sure I understood everything clearly. But MALLET is better. If you want a few examples of complete topic models on collections of eighteenth- and nineteenth-century volumes, I’ve put some models, with R scripts to load them, in my GitHub folder.

If you want to understand the technique more deeply, the first thing to do is to read up on Bayesian statistics. In this post, I gloss over the Bayesian underpinnings of LDA because I think the implementation (using a strategy called Gibbs sampling, which is actually what I described above!) is intuitive enough without them. And this might be all you need! I doubt most humanists will need to go further. But if you do want to tinker with the algorithm, you’ll need to understand Bayesian probability.

David Blei invented LDA, and writes well, so if you want to understand why this technique has “Dirichlet” in its name, his works are the next things to read. I recommend his Introduction to Probabilistic Topic Models. It recently came out in Communications of the ACM, but I think you get a more readable version by going to his publication page (link above) and clicking the pdf link at the top of the page.

Probably the next place to go is “Rethinking LDA: Why Priors Matter,” a really thoughtful article by Hanna Wallach, David Mimno, and Andrew McCallum that gives a more principled account of the “hyperparameters” I glossed over.

Then there is a whole family of techniques related to LDA (Topics Over Time, Dynamic Topic Modeling, Hierarchical LDA, Pachinko Allocation) that one can explore rapidly enough by searching the web. In general, it’s a good idea to approach these skeptically. They all promise to do more than LDA does, but they also add assumptions to the model, and humanists are going to need to reflect carefully about which assumptions we actually want to make. I do think humanists will want to modify the LDA algorithm, but it’s probably something we’re going to have to do for ourselves; I’m not convinced that computer scientists understand our problems well enough to do this kind of fine-tuning.

86 replies on “Topic modeling made just simple enough.”

Ted, I’ve really appreciated your explanatory posts taking the lid a little way off the black boxes. They’re helpful (and pleasurable!) reading for me, as someone thinking of getting more serious about computational text analysis as a complement to my conventional literary scholarship… But those Wikipedia links leave me more tantalized than feeling like I have a command of the material. Where could I go for a good introductory discussion of text-processing techniques? Is there a strong basic textbook out there? How about for the underlying statistics? Something on either topic with few prerequisites would be ideal for this once-upon-a-time math person who never took stats (was too busy being “purist” at the time). I believe, perhaps foolishly, that I have enough mathematical and technical background to acquire some basic skill on my own, with the right textbooks…

Thanks for the kind words. You’re asking a very good question. I’m afraid the honest answer is that I’m writing these posts to fill the gap you’re describing. I had exactly the same experience: Okay, I got the general principle of topic modeling. And I could sort of understand David Blei’s more accessible articles … except for the passages of squiggles and Greek letters that he seemed to feel were very important. “Hmm,” I thought, “I should probably do something about learning to read those squiggles.”

I believe both Steve Ramsay and Matt Jockers have books in the pipeline that will in different ways address this problem. Since they’re not out yet, what I did in practice was go to the library, look up “Bayesian statistics,” and check out two introductory textbooks. I can’t recommend either of them really. They were very hard, and not all of the deeper math was actually useful for me. But what I really needed was just to understand the meaning of the notation, and I did manage to get that out of them. Once you understand the notation, you can actually read the original computer-science articles about knowledge discovery/text mining, and so far I’ve found those articles more useful than any textbook. But it’s possible that other people will have better book recommendations. And there are definitely better solutions in the pipeline.

Thanks for the reply! That was what I guessed the situation was. Discouraging, but then it’s all the better that you and others are working to fill the gap. I know I’m waiting eagerly for Matt’s textbook. It’s good to know the CS articles are relatively accessible, if only I could fill in the Bayesian stats…

I want to echo Andrew’s comment – thanks very much! I have just started exploring topic modeling for a project on the history of economics, and the combination of your jargon-free explanations with the more technical articles by Blei and others has been extremely helpful.

This is really excellent! I wonder why topic modeling, of all things, has so captured the imagination of digital humanities scholars of all stripes so as to warrant so much effort at actually understanding the structure of the technique.

I think it’s primarily two things:

1) Topic modeling can accept large collections of unstructured text (which are what we have),

2) and map/explore them in what at least appears to be a fairly general-purpose way.

In that sense, it’s promising to address an existing, widely-acknowledged problem (we don’t know how to map these collections). It’s true that the technique is slow, hard to interpret, etc. But all that matters less than the underlying humanistic motive.

Thanks for this excellent article about the application of Topic Modelling in Digital Humanities.

It seems that the equation for P(Z|W,D) should be divided by something like “total number of words in D + \alpha” to be a proper probability.

Yes, you’re right. I think I ought to have a proportionality sign instead of an equals sign in that equation.

[…] For the original, see: Topic modeling made just simple enough […]

[…] https://tedunderwood.wordpress.com/2012/04/07/topic-modeling-made-just-simple-enough/ On parallelizing LDA: if it is implemented with MapReduce, which approximation method works well? Stanford’s ScalaNLP project is worth a look: http://nlp.stanford.edu/javanlp/scala/scaladoc/scalanlp/cluster/DistributedGibbsLDA$object.html There is also the NIPS 2007 paper: Distributed Inference for Latent Dirichlet Allocation http://books.nips.cc/papers/files/nips20/NIPS2007_0672 And the ICML 2008 paper: Fully Distributed EM for Very Large Datasets http://www.cs.berkeley.edu/~jawolfe/pubs/08-icml-em […]

Thank you very much for the post! As a humanities scholar currently figuring out how to apply topic maps to the study of little magazines, it has gone some way to fill in the gaps and provide useful links for further reading. And yes, a good — readable — textbook is eagerly anticipated. Oh, and thanks for this morning’s guffaw: “The notion that documents are produced by discourses rather than authors is alien to common sense, but not alien to literary theory.” Brilliant.

[…] 1. Read https://tedunderwood.wordpress.com/2012/04/07/topic-modeling-made-just-simple-enough/ […]

[…] approaches to literary study, when confronted with that many reviews, I start to wonder if topic modeling might be one way to start to draw meaningful conclusions from them. I’m also woefully […]

[…] excellent explanations of what topic modeling is and how it works (many thanks to Matt Jockers, Ted Underwood, and Scott Weingart who posted these explanations with humanists in mind), so I’m not going to […]

[…] are demonstrating the possibilities. Tools like Mallet are making it easy. And generous DHers like Ted Underwood and Scott Weingart are doing a great job explaining what it is and how it […]

[…] examples of work using topic modelling can be found on Ted Underwood’s blog and the links supplied there (or my own students’ use of the technique to study the Middle […]

Reblogged this on TEETRINKEN IN WALES (YN YFED PANAD O DE).

[…] Topic modeling made just simple enough: Ted Underwood’s very coherent account of LDA and some best practices for humanists. […]

[…] Jay Varner to create heatmaps, graphs, and a web interface that can help users navigate the data. Topic modeling and distant reading methods were also used to determine the frequencies of specific words […]

[…] If you’re on Twitter and you follow any literary theorists and/or digital humanists, you’ve probably heard about topic modelling; it’s all the rage. I have to admit, I’ve yet to find time to really delve into topic modelling, but I’ve been following Ted Underwood’s wonderful work at the University of Illinois, and I’d rather just read his description from a recent blog post: […]

[…] the problem is this: How do you visualize a whole topic model? It’s easy to pull out a single topic and visualize it — as a word cloud, or as a frequency […]

[…] I see a way out of this particular predicament in a way that’s universally applicable. I like Ted Underwood’s response, that he’s in search of “meaningful ambiguity”, but I’m not certain […]

[…] For more detailed explanations of how topic modeling works, and how it can be applied, take a look at the other speaker videos from the MITH/NEH conference. Ted Underwood has offered his explanation of how the process works in a post titled Topic Modeling Made Just Simple Enough. […]

[…] It was developed about a decade ago by David Blei among others. Underwood has a blog post explaining topic modeling, and you can find a practical introduction to the technique at the Programming Historian. Jonathan […]

[…] Topic modeling is a way of picking up patterns across large bodies of texts (see Underwood’s own definition and explanation). I tend to associate topic modeling with top-secret, government-sponsored email-reading, but this […]

[…] Underwood, “Topic Modeling Made Just Simple Enough,” The Stone and the Shell, accessed March 17, 2013. […]

[…] Resources David Blei, Topic Modeling and Digital Humanities David Blei, Probabilistic Topic Models [PDF] Miles Efron, Peter Organisciak, Katrina Fenlon, Building Topic Models in a Federated Digital Library Through Selective Document Exclusion [PDF] Andrew Goldstone and Ted Underwood, What Can Topic Models of PMLA Teach Us About the History of Literary Scholarship? David Mimno, The Details: Training and Validating Big Models on Big Data Miriam Posner, Very basic strategies for interpreting results from the Topic Modeling Tool Lisa Rhody, Topic Modeling and Figurative Language Ted Underwood, What kinds of “topics” does topic modeling actually produce? Ted Underwood, Topic modeling made just simple enough. […]

Reblogged this on sumnous.

Thank you Ted, I found this post very useful!

[…] “Topic Modeling Made Just Simple Enough” (2012) [version annotated by A. Liu ] […]

[…] Ted Underwood, Topic Modeling Made Just Simple Enough https://tedunderwood.com/2012/04/07/topic-modeling-made-just-simple-enough David Blei, Topic Modeling and Digital Humanities, […]

[…] by searching for significant clusters of words (you can find out how that works in more detail here and here) in the texts you put in. Then it generates spreadsheets that show you the topics and how […]

[…] over in Ted Underwood’s Topic Modeling made just Simple Enough. is Thomas Bayes’ work on probability. Likewise, several of the commentators for topic modeling posts seem to self-identify themselves as […]

Very nice article.. Thanks for sharing.

[…] Topic Models with JSTOR’s Data for Research (DfR)“; Ted Underwood, “Topic Modeling Made Just Simple Enough” and “Visualizing Topic Models“; and Ben Schmidt, “Keeping the Words in […]

[…] items based on the titles referenced there. (On topic models, see Ted Underwood’s very helpful blog post.) We have been using topic models in the Wisconsin VEP project to look at our collections of texts, […]

[…] Topic modeling made just simple enough. […]

[…] are not going to discuss how topic modeling works in this post. (A good explanation can be found on Ted Underwood’s blog.) We do want to show something that happened when we began […]

[…] Underwood begins to address this problem in a blog post, in which he […]

[…] modeling be sketched out, so for those who are interested we simply point here to the ample existing introductions, contextualizations, and critical discussions, and turn now to practical application […]

[…] about ways to model data that are suited to our own fields. This is a point Ted Underwood made early on in the conversation about LDA, well before much had been published by literary scholars on the […]

[…] following sequence: Matt Jockers, The LDA Buffet Is Now Open (very introductory); Ted Underwood, Topic Modeling Made Just Simple Enough (simple enough but no simpler); Miriam Posner and Andy Wallace, Very Basic Strategies for […]

[…] doing this to orient yourself, you may wish to read Ted Underwood’s more technical blog post Topic modeling made just simple enough for a more detailed perspective. (Note that this post is from 2012 and some information may be out […]

[…] Ted Underwood, “Topic modeling made just simple enough” […]

[…] Stone and the Shell blog, which is run by Ted Underwood of the University of Illinois. His post Topic Modeling Made Just Simple Enough offered a great overview of the […]

[…] Ted Underwood, “Topic Modeling Made Just Simple Enough.” Personal Blog [https://tedunderwood.com/2012/04/07/topic-modeling-made-just-simple-enough/]. […]

[…] getting a sense of the contents of your workset, however many texts it may include. There’s an argument to be made that topic modeling as a technique only begins to become informative when applied to a […]

[…] a great but short (and almost math-free) introduction to probabilistic topic models can be found in this Ted Underwood’s post. The basic intuition behind probabilistic topic models is that each document is in turn […]

[…] Topic modeling made just simple enough […]

[…] This is from the Stone and the Shell. It describes the concept of LDA, and says some of the related topics are Topics Over Time, Dynamic Topic Modeling, Hierarchical LDA, and Pachinko Allocation. […]

[…] Ted Underwood’s primer “Topic Modeling Made Just Simple Enough” (https://tedunderwood.com/2012/04/07/topic-modeling-made-just-simple-enough/) […]

[…] analysis https://tedunderwood.com/2012/04/07/topic-modeling-made-just-simple-enough/ Regular expressions (regexes) […]

[…] Underwood, Ted. “Topic Modeling Made Just Simple Enough.” The Stone and the Shell. April 2012. Web. https://tedunderwood.com/2012/04/07/topic-modeling-made-just-simple-enough/ […]

[…] help first time users get started with MALLET and TMT (like this overview of topic modeling, or this introduction to […]

[…] into Voyant Tools; we experimented with an open source Topic Modeling app (and talked about how mathematically insane topic modeling […]

[…] but aren’t interested in deriving any equations, I highly recommend Ted Underwood’s “Topic Modeling Made Just Simple Enough” and David Blei’s “Probabilistic Topic […]

[…] common way of uncovering such topics is Latent Dirichlet Allocation (LDA), a statistical method which learns the distribution of topics in a body of text. We have previously used LDA to better understand user feedback on […]

[…] for a good critical assessment of topic modeling from the digital humanities side, see Underwood, Topic Modeling Made Just Simple Enough […]

[…] but even though there are two well-written blog posts that explain LDA (Edwin Chen’s and Ted Underwood’s) to non-mathematicians, it still took me quite some time to grasp LDA well enough to be able to […]

[…] of Latent Dirichlet Allocation (LDA), Pachinko Allocation and Hierarchical LDA. Check this page out should you wish to know about Topic Modelling in […]

[…] One of the most popular tools for exploring text collections is topic modelling. Topic modelling is a method of exploring latent topics within a text collection, often using Latent Dirichlet Allocation. In simple terms, “Topic modeling is a way of extrapolating backward from a collection of documents to infer the discourses (“topics”) that could have generated them” (Underwood, 2012). […]

[…] [8] https://tedunderwood.com/2012/04/07/topic-modeling-made-just-simple-enough/ […]

[…] Topic modeling is a text analysis approach that utilizes an unsupervised machine learning algorithm (there are several options, including LDA) to identify underlying “topics” that organize a group of documents. My own application of topic modeling does, indeed, use LDA, or Latent Dirichlet Allocation, with MALLET, an open-source package from the University of Massachusetts, Amherst. For a tutorial on MALLET, check out the lesson in Programming Historian. […]

[…] Ted Underwood, “Topic Modeling Made Just Simple Enough” […]

[…] [2] Topic modeling made just simple enough. https://tedunderwood.com/2012/04/07/topic-modeling-made-just-simple-enough/ [3] Learning topic models, the very basics of document classification with natural language processing […]

[…] nuts and bolts of LDA have been covered well elsewhere, but the general idea is that there exists an unobserved collection of topics each of which uses a […]

[…] Underwood begins to explore the importance of stop words/ambiguous words in Topic modeling made just simple enough in revealing form, but I found that this conclusion was much more difficult to make for our […]

[…] Ted Underwood, “Topic modeling made just simple enough,” Apr. 7, 2012, https://tedunderwood.com/2012/04/07/topic-modeling-made-just-simple-enough/ […]

[…] as an exploratory heuristic, and every so often as a basis for argument. A family of unsupervised algorithms known as “topic modeling” has attracted a good deal of attention in the past couple of years, from both social […]

[…] I would love to say more about this, but I don’t want to stray from the main problem. If you are interested, you can read more about it here. […]

[…] I would also like to share with you all a very interesting read on Topic Modeling by Ted Underwood, University of Illinois. […]

[…] or at least a speculation into the ensuing form of history is grasped by the other writers of Underwood, Blevins and Blei in their various articles. In discussing topic modeling, they specifically […]

[…] Ted. “Topic Modeling Made Just Simple Enough.” The Stone and the Shell (blog), April 7, 2012. https://tedunderwood.com/2012/04/07/topic-modeling-made-just-simple-enough/. […]

[…] Ted Underwood, Topic Modeling Made Just Simple Enough […]

[…] next step of the process was topic modeling using Latent Dirichlet Allocation (LDA). The statistical model allows us to discover topics […]

[…] Read “Topic modeling made just simple enough” by Ted Underwood […]
